2018 was a great year for Tech at Hiring Hub. (Strictly speaking, the stuff I’m going to talk about started back in October 2017, but that wouldn’t work quite as well for a title 😉.)

As a small team, we’ve achieved a lot. Business value is always the critical thing for us in the tech team, but my colleague and our CTO, Anna Dick, has already covered that in her year round-up. As Lead Engineer, I’ll be focussing more on the technical side of things.

In summary, we’ve:

- Upgraded and migrated our production and test infrastructure

- Fixed up a whole tonne of technical debt

- Prepared for GDPR and implemented compliance strategies such as enforcing data retention and encryption

- Upgraded to the latest and greatest versions of our underlying tech stack

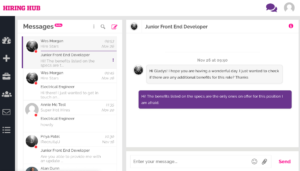

- Started to work with Action Cable to provide real-time features

- Started a journey to adopt up-to-date front-end technologies such as Webpacker, React and ES6

- Started a journey to move over to a container-based infrastructure

Infrastructure

Towards the end of 2017, we ran into issues with our then hosting provider, including significant downtime on their managed database service, which caused us a lot of pain.

Knowing that 2018 would bring significantly more change and demand on our infrastructure, we decided to invest early and move to a platform that could offer the stability, flexibility and elasticity we required.

Being a small team, it was important that this solution was as “turnkey” and as “hands-off” as possible.

We assessed both the cost and time investment required for the big cloud providers, but were put off by the amount of knowledge (and therefore time) needed to set up and maintain what we felt should be a relatively simple setup. In addition, our infrastructure for most of 2018 would be fairly static: we didn’t need the vast elasticity offered by the big-name cloud providers, which comes at a premium.

Having had a great experience with Linode previously, and finding them very competitive, we settled on them as our hosting provider. Linode’s offering is pretty simple compared to the likes of AWS: VMs (or “Linodes”, as they call them) of varying sizes at a fixed monthly cost. Simple to understand, simple to estimate costs for, and easy to scale.

To get the managed, “hands-off” solution, we paired Linode with Cloud 66’s Rails service, a kind of managed DevOps layer that lets us spin up and scale environments with relative ease. It works with a whole host of cloud providers, from Linode and DigitalOcean to Amazon and Google, and even your own on-premise infrastructure. We’ve found Cloud 66 an absolute delight to work with and very supportive; their CEO was even kind enough to take the time to speak to us about their approach to data security and how they were preparing for GDPR.

Moving forward into 2019 with a “cloud native architecture”

It’s fair to say that we have probably reached the limits of our current setup and a priority for 2019 is to move to (yes you guessed it) a containerised infrastructure.

The reasons for this are all the usual “abilities”: flexibility, reliability, scalability. We need to be able to spin up new test environments with ease, ideally to the point where each branch we push gets its own test environment (commonly known as “review apps”). That would make demoing and testing easier, with no waiting for a shared test environment to become available.

We also want as much parity between environments as possible: ideally, we develop against the same container images that are promoted to a test environment and ultimately deployed to production, reducing the risk of nasty surprises as our software makes its way out into the wild. At the same time, we want best-in-class tools to auto-scale and manage our resources as our needs grow.

We’ve started this journey and hope to roll out new container-based test environments by Q3 2019. We are currently assessing different cloud providers, but we’re aiming for something ultimately vendor-neutral, so the setup will likely be based around Kubernetes and Spinnaker.

Technical Debt and Version Upgrades

It’s fair to say that at the start of 2018 Hiring Hub had its fair share of technical debt.

Hiring Hub’s journey started back in 2011 with an MVP that worked well to prove a concept. The main codebase started back in the days of Rails 3 and has gone through various upgrades over the years.

With a dedicated Tech team on board, it was time to start maturing the platform and tackle some of the early stage technical debt we’d acquired.

Tackling the Debt

Due to its age, the codebase has picked up a mix of technologies and approaches to problems as patterns fell in and out of favour.

In addition, numerous developers have worked on the project, each adding their own unique “flair” and approach. Despite all this, we’ve been careful not to fall into the pattern of rewriting large swathes of code, as we didn’t feel that was the right approach. At the end of the day, this code works and gets the job done for our customers, so we’ve been pragmatic and made minimal changes around the features we’re working on.

That said, there have been instances where we’ve had to uproot core parts of the system. A couple of our models, for example, contained what could only sensibly be described as a state machine. Instead of using one of the widely used state machine libraries, these models had been implemented with lots of custom methods, status columns and case statements, and the logic was broken, causing our customers real pain. We’d tried to nip and tuck around the edges, but the sensible thing to do here was to add a proper state machine. Luckily, we were blessed with good tests that helped us move with confidence. We added the popular aasm (Acts As State Machine) gem to implement an explicit state machine, making the implementation much simpler, more robust and less error-prone.
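To illustrate what making the states explicit buys you, here is a minimal plain-Ruby sketch of a transition table. It is not aasm’s API and not our actual model: the `CandidateApplication` class, its states and its events are hypothetical. aasm expresses the same idea declaratively with its `state`, `event` and `transitions from:, to:` DSL and generates `may_<event>?` and `<event>!` methods for you, but the core benefit is the same: every legal transition is listed in one place, and anything else is rejected rather than silently leaving a record in a bad state.

```ruby
# Raised when an event is fired from a state that doesn't allow it.
class InvalidTransition < StandardError; end

# Hypothetical model: every legal transition lives in one table instead of
# being spread across custom methods and case statements.
class CandidateApplication
  TRANSITIONS = {
    shortlist: { from: %i[submitted], to: :shortlisted },
    hire:      { from: %i[shortlisted], to: :hired },
    reject:    { from: %i[submitted shortlisted], to: :rejected }
  }.freeze

  attr_reader :state

  def initialize(state = :submitted)
    @state = state
  end

  # True if the event is allowed from the current state.
  # Unknown events raise KeyError via Hash#fetch.
  def may?(event)
    TRANSITIONS.fetch(event)[:from].include?(state)
  end

  # Applies the event, or raises and leaves the state unchanged.
  def fire!(event)
    raise InvalidTransition, "cannot #{event} from #{state}" unless may?(event)

    @state = TRANSITIONS.fetch(event)[:to]
  end
end

app = CandidateApplication.new
app.fire!(:shortlist)
app.fire!(:hire)
puts app.state # prints "hired"
```

Compared with `case` statements scattered over a status column, adding a state means adding a line to the table, and an illegal transition (say, rejecting an already-hired candidate) fails loudly instead of corrupting data.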

Upgrading the stack

Between the onslaught of feature development and day-to-day, business-as-usual tasks, we’ve managed to make some dramatic upgrades to our application’s underlying technology.

We’ve been able to upgrade to the latest version of Rails and adopt technologies like Webpacker, Action Cable and React.

Unfortunately, we also had some dependencies that had become abandonware. Our previous admin system was built using the Typus gem, which was not compatible with the latest version of Rails. This stopped us from upgrading beyond Rails 4.2, and therefore from adopting newer features like Action Cable, which we needed to build our new real-time messaging features. We had no choice but to build a new admin portal and drop the old one entirely. We did this incrementally, building out the new admin portal side by side with the old one, and trialled them both until we were confident that our customer support team had everything they needed in the new one.

We used ActiveAdmin, which has been a pleasure to use and has provided us with many opportunities to further enhance the admin portal beyond its previous capabilities.

Once we had removed the last major impediment to upgrading past Rails 4.2, the rest of the process was mostly a technical exercise in understanding how the various Rails APIs had changed and updating our code accordingly. Again, good test coverage was essential, backed up by a couple of rounds of thorough manual testing.

Action Cable

Adopting Action Cable had its challenges. Beyond getting to grips with how it works from a programming standpoint, we also hit some infrastructure-related issues; we even managed to momentarily DDoS ourselves in the process! This was due to a misconfiguration in our nginx setup, which meant that all available connections were consumed when we went live with our Action Cable features. We decided to isolate Action Cable on its own set of servers to prevent a repeat. This means the application can keep running, and people can still get stuff done, even if there is an issue with the real-time features Action Cable provides.
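Rails supports running Action Cable as a standalone server, which is one way to get the kind of isolation described above. The sketch below follows the standalone setup described in the Rails guides; the host name and origin are placeholders, it is an illustration rather than a copy of our production configuration, and it does not cover the nginx side.

```ruby
# cable/config.ru: boots the app but serves only Action Cable,
# so WebSocket connections never compete with normal web requests.
require_relative "../config/environment"
Rails.application.eager_load!

run ActionCable.server

# config/environments/production.rb (excerpt)
Rails.application.configure do
  # Don't mount the cable server inside the main web app processes.
  config.action_cable.mount_path = nil
  # Tell browser clients where the dedicated cable servers live (placeholder host).
  config.action_cable.url = "wss://cable.example.com/cable"
  # Accept WebSocket connections from pages served by the main app (placeholder origin).
  config.action_cable.allowed_request_origins = ["https://www.example.com"]
end
```

The cable processes can then be started separately with something like `bundle exec puma -p 28080 cable/config.ru`, and the layout’s `action_cable_meta_tag` helper passes the configured URL to the JavaScript consumer.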

Overall our experience with Action Cable has been a positive one and we have many features in the pipeline where we can use it to make almost every aspect of the platform as real-time as possible.

Getting on board with React and the new-skool front-end tech!

For a team of predominantly Rails developers who could just about cobble together a bit of jQuery to get the job done, this has perhaps been our biggest challenge and our biggest achievement.

I can’t commend the team enough for the effort they’ve put in (a lot in their own free time) to up-skill in this area.

It is perhaps an understatement to say that the JS ecosystem is complex. At times it’s bewildering! But it’s also impressive: the rate of change and innovation over the last few years has been extraordinary, and as a Ruby developer looking in from the sidelines, I know I’ve not been able to keep up.

We’ve successfully started using React in many parts of the site. We began with something low-key and low-risk, and now we’re starting to replace major functionality with React components. Everything from our menus to our shiny new jobs “kanban” boards is being built in React.

But we’ve also been careful not to bite off too much. We are mindful of the tooling and developments going on in the community, but being a small team we like to keep things simple and easy to understand.

A good example of this is Redux. We’d received mixed advice on whether to use it: some people say definitely do, others say keep away as much as possible. So we’ve held off on anything that complex until it’s really needed. In the meantime (although we are not fans of “rolling our own”), one of our developers, Tim, has applied his learning to knock together a simple-to-understand, Redux-esque state management system without the bells and whistles, which we accept might need to be replaced with actual Redux at some point.
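To show the general shape of a Redux-esque store (this is not Tim’s implementation, which isn’t shown in this post), here is a minimal sketch in Ruby. State only changes through `dispatch(action)`, which runs a pure reducer function and then notifies subscribers. The `Store` class, the `BOARD_REDUCER` lambda and the `:move_card` action are hypothetical names chosen for illustration, loosely modelled on moving cards around a jobs kanban board.

```ruby
# Minimal Redux-style store: state changes only through dispatch, which runs
# a pure reducer and then notifies every subscriber with the new state.
class Store
  attr_reader :state

  def initialize(reducer, initial_state)
    @reducer = reducer
    @state = initial_state
    @listeners = []
  end

  # Registers a listener block; returns a lambda that unsubscribes it.
  def subscribe(&listener)
    @listeners << listener
    -> { @listeners.delete(listener) }
  end

  def dispatch(action)
    @state = @reducer.call(@state, action)
    # Iterate over a copy so a listener can safely unsubscribe itself.
    @listeners.dup.each { |listener| listener.call(@state) }
    action
  end
end

# Hypothetical reducer for a kanban board: moves a card between columns
# by building a new hash rather than mutating the existing state.
BOARD_REDUCER = lambda do |state, action|
  case action[:type]
  when :move_card
    columns = state.transform_values { |ids| ids - [action[:card_id]] }
    columns[action[:to]] = columns.fetch(action[:to], []) + [action[:card_id]]
    columns
  else
    state # Unknown actions leave the state untouched.
  end
end
```

Usage: create `Store.new(BOARD_REDUCER, { applied: [1, 2], interviewing: [] })`, subscribe a listener, then `dispatch({ type: :move_card, card_id: 1, to: :interviewing })`. Keeping the reducer free of mutation is the design choice that matters: in React, it is what lets components detect a change with a cheap reference comparison.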

The point is that we’ve kept complexity to a minimum and focussed our resources on proving out new features, whilst still advancing our stack at a sustainable pace.

What we didn’t do…

We didn’t adopt microservices. Instead, we learned to love the monolith we have and avoided the additional complication of managing inter-service communication. At least for the time being, we’re relying on good OOP practices to divide and conquer our codebase.

We may use more of a services-like architecture in the future when the time is right to do so and an evented architecture (using Kafka or something like it) certainly feels like a good fit for the direction we are heading.

For now, though, after lengthy discussions, we’ve decided to stick with (and love) our monolith. We feel we can deliver more value to the business this way: we are small and nimble enough not to need such concrete divisions in our codebase, and we don’t have the communication overheads of larger teams, where defining strict integration contracts is beneficial.

Wrapping Up

2018 has certainly been a success for tech at Hiring Hub: we’ve achieved a lot with a small team at a sustainable pace. Feedback from customers has been really positive, and the team are excited to push the product and our tech forward throughout 2019 as we continue to build the best-in-class recruitment marketplace.

Interested in joining our team?